Lecture 8. Suffix trees for NLP

Содержание

Text (collection) representation model

Vector Space Models

Vector Space Models, VSM [Salton, Wong, Yang, 1975] : every text is a vector in a space of terms. Usually is used along with cosine similarity measure.

Pro:

- Makes linear algebra operation and thus machine learning applicable

- Is simple and based on human intuition

Contra:

- The more the words are there in the the text, the higher is the cosinesimilarity

- Word order is lost

- Need some additional hacks to use bigrams and ngrams as terms

Applications:

- Information retrieval

- Topic, genre and maybe sentiment classification

- Extractive summarization

- Spam filtering

Generalized Vector Space Models

Generalized Vector Space Models, GVSM [Wong, Ziarko, Wong, 1985]: introduces a term to term correlation in the VSM.

Pro:

- Is still easy

- Synonyms or near synonyms are introduced into the model

- Term to term correlation may be computed in several ways: co-occurrences in the collection under consideration, co-occurrences in a large corpus, thesaurus-based relations

Contra:

- A lot of computations: if jVj = n (the number of terms), there are 2ndimensions in the GVSM

- Word order is lost

- Need some additional hacks to use bigrams and ngrams as terms

Applications: mainly information retrieval

Vector Space Model of Semantics

Vector Space Model of Semantics [Turney, Pantel, 2010]: terms are vector in the space of contexts (usually, also terms).

Pro:

- Is still easy

- Allows to find semantically similar words

- Very loved by scientific community

Contra:

- Word order is lost

- Need some additional hacks to use bigrams and ngrams as terms

Applications:

- Word similarity detection, clustering, classification

- Automatic thesaurus generation

- Word sense disambiguation

- Context-sensitive spelling correction

- Textual advertising

Language Model [Ponte, Croft, 1998]

Language Model [Ponte, Croft, 1998]: a probability distribution over sequences of words.

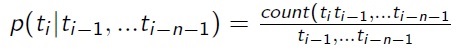

Unigram model: p(t 1 t 2 t 3 ) = p(t 1 )p(t 2 )p(t 3 )

Bigram model: p(t 1 t 2 t 3 ) = p(t 1 |BOS)p(t 2 |t 1 )p(t 3 |t 2 )p(EOS|t 3 )

Language Model [Ponte, Croft, 1998]: a probability distribution over sequences of words.

Pro:

- The word order is not lost

- Makes Markov chain theory applicable

Contra:

- The problem of unseen words

- How to choose n?

- Long relations between words or sentences (for example, anaphora) are lost

Applications:

- Information retrieval

- Text generation

- Abstractive summarization

- Speech recognition

- Speech generation

To sum up:

- VSM-like models: no word order, algebraic structures, classification of any kind, stemming and lemmatization for dimension reduction

- LM-like models: partially word order, Markov chains, generation of text and speech

- What if new word appears? What if there is a typo? What if stemming is not always correct? Use edit distance on words or stems or use symbol ngrams instead as terms or ...